WHAT

Loading into Snowflake can be done in multiple ways –

- Bulk loading from Snowflake stages (internal and external)

- Using ETL/Data Integration tools like Matillion, Informatica, etc.

- Continuous loading using “Snowpipe”

Snowpipe is a serverless function provided by Snowflake that enables near real time ingestion of data into Snowflake. It helps loading frequent micro-batches of data from stage into Snowflake without having to manually trigger a COPY command.

WHY

Although at a 50,000 ft level it may simply seem like a COPY command that is set to trigger every time a file arrives in stage, there are a few key things that stand out –

- Serverless

- Micro batches

- Frequent

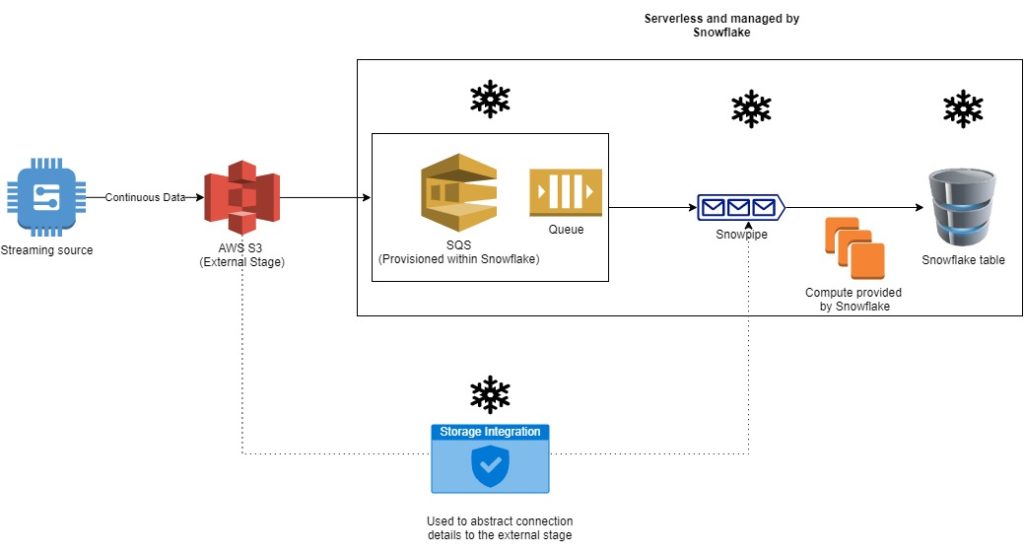

What this means is that, unlike a regular bulk load where the user is expected to plan and provision a warehouse for the load, Snowpipe’s compute is provisioned and scaled automatically by Snowflake.

Snowflake also takes it a step further by creating an SQS queue for you within the Snowflake account. This allows you to create Event Notifications on your S3 bucket that can be sent to this SQS queue which is polled by your Snowpipe.

HOW

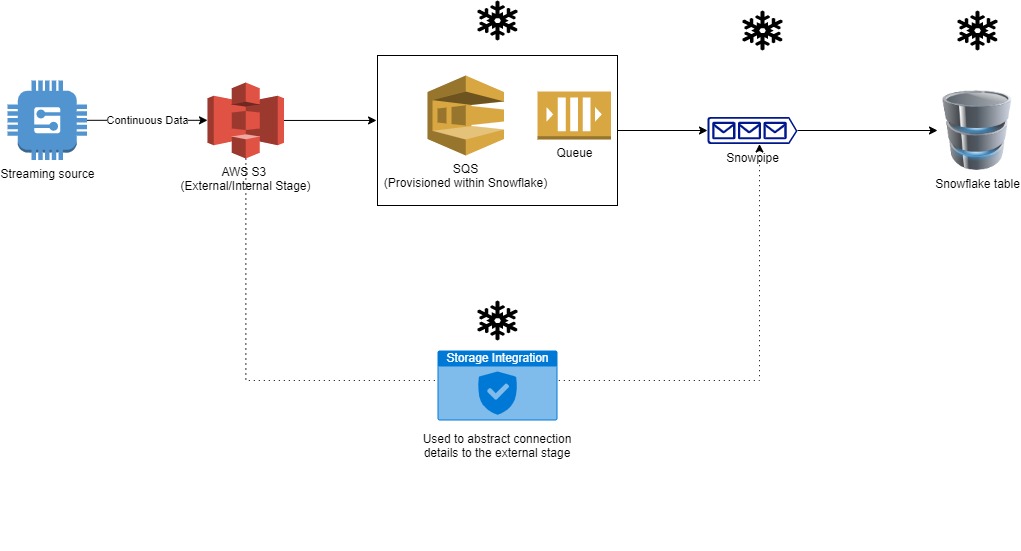

The architecture below gives a bird’s eye view of where the Snowpipe fits and what role it performs.

If you want to learn more about Storage Integration in Snowflake, head over to the article below

Syntax

CREATE [ OR REPLACE ] PIPE [ IF NOT EXISTS ] <name>

[ AUTO_INGEST = [ TRUE | FALSE ] ]

[ AWS_SNS_TOPIC = <string> ]

[ COMMENT = '<string_literal>' ]

AS <copy_statement>Example

create or replace pipe helloworld_db.public.hello_world_pipe

auto_ingest=true as

copy into members

from @hello_world_stage

file_format = helloworld_db.public.hello_world_ff;Setting AUTO_INGEST=TRUE ensures that Snowpipe will automatically read data from the external stage using SQS. If you set it to false, you will need to use Snowpipe’s REST API endpoints to ingest the data. You’d typically use this option when working with internal stages.

The AWS_SNS_TOPIC parameter comes handy when you wrap SQS messages in an SNS topic. Why, did you ask?

AWS S3 doesn’t allow you to configure multiple event notifications for the same path. If you want to use the same path for different events, you can choose to send it to send the event to an SNS topic instead. You can then subscribe the Snowflake SQS to the SNS topic. I will cover this in a different article.

Wait….There’s More

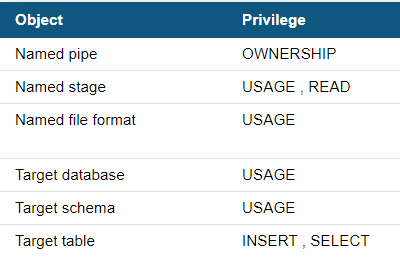

Always ensure that you create a separate user to own all Snowpipes. This user should be assigned a role that has the following privileges –

Also make this role the default role for the Snowpipe user.

Finally, you have to configure the event notifications on your S3 bucket. First, grab the ARN for the SQS queue that Snowflake created for you using the command –

show pipes;Use the ARN value under notification_channel as the destination for your event notification under the S3 bucket.

Some final comments –

It is quite possible that your Snowpipe doesn’t work properly at the first go. The reason could be because your stage isn’t setup properly or you don’t have the right permissions in place. The command to find the current status of the Snowpipe is –

select SYSTEM$PIPE_STATUS('HELLO_WORLD_PIPE' );If you want to load data from files in the stage that were placed there before the pipe was created, simply use –

ALTER PIPE HELLO_WORLD_PIPE REFRESH;Also, before you alter your Snowpipe, always remember to PAUSE it using

ALTER PIPE HELLO_WORLD_PIPE SET PIPE_EXECUTION_PAUSED=TRUE;